It’s Tuesday. This is how a humanoid AI robot gets delivered. figure.ai is shipping dozens of these to its commercial customers.

Onward 👇

In today’s Brainyacts:

A growing crisis for state courts

A simple workflow for an AI Use Policy (with prompts)

Forget Google Search, use ChatGPT and other AI model news

Will DOGE's aim at Thomson Reuters hurt their legal AI business? Plus more news you can use

👋 to all subscribers!

To read previous editions, click here.

Lead Memo 🙀📑 A Growing Crisis for State Courts

As part of my ongoing effort to showcase the initiative and insights of my students, I’m sharing an excellent and timely article by two of my law students, Kennith Echeverria and Parker McGuffey.

Their piece explores the darker implications of generative AI in state courts—how tools once meant to democratize legal processes can now be weaponized by bad actors to manipulate evidence, flood dockets, and overwhelm opponents. Given the extraordinary advancements released by major AI developers over the past two weeks, this analysis couldn’t be more pertinent.

The students offer compelling observations on how GenAI risks amplifying inequities and destabilizing judicial efficiency. They also propose forward-looking solutions, from blockchain-validated evidence to punitive sanctions, sparking an important conversation about the intersection of law, technology, and fairness.

This is a thought-provoking and pragmatic exploration of an emerging issue—one that demands our collective attention as the pace of AI adoption accelerates.

GenAI and the Legal System: A Growing Crisis for State Courts

Picture this: A state court, already overwhelmed by an overloaded docket, is suddenly inundated with motions, affidavits, and evidence—each demanding meticulous review. At first glance, everything appears legitimate. But closer inspection reveals something insidious: fabricated documents, falsified evidence, and disturbingly realistic deepfake videos. The culprit? Generative AI, wielded by bad actors intent on manipulating the legal system to secure victories at any cost.

While this might sound like the plot of a dystopian legal thriller, it’s a stark reality in today’s GenAI-driven world. Much has been written about GenAI’s potential to streamline legal processes and improve access to justice. Yet there’s a darker side—one that threatens to destabilize an already overburdened judicial system.

Structural Imbalances

GenAI democratizes tools once reserved for experts. With a few prompts, anyone can now generate legal documents, manipulate photos, or fabricate videos and audio recordings. While this seems like progress, it’s also a Pandora’s box. Bad actors have unprecedented power to exploit these tools.

State courts—where over 90% of lawsuits occur—are particularly vulnerable. Unlike better-resourced federal courts, most state courts operate with limited staff and outdated infrastructure. A flood of AI-generated filings that look legitimate on the surface could bury clerks and judges in an administrative nightmare. Verifying authenticity takes time and resources courts simply don’t have. The result? Delays, growing backlogs, and erosion of public trust in the judiciary.

Deterrence and the Inequity Problem

“Won’t bad actors face punishment for fraudulent filings?” In theory, yes. Courts can impose fines, sanctions, or other penalties. But this overlooks a critical reality: the cost of challenging these filings.

To dispute a fabricated document, the opposing party must first identify the fraud, file responsive pleadings, and bear significant legal costs. For well-funded litigants, this becomes a weaponized advantage: overwhelming an under-resourced opponent with a flood of AI-generated evidence or filings. The less-resourced party faces an impossible choice—settle on unfair terms or withdraw from litigation altogether.

This isn’t just inefficiency. It’s an amplification of existing inequities, tipping the scales of justice in favor of the privileged few.

On the Brink: Trust in the Judiciary

The judicial system's efficiency, its impartiality, and the public's trust in it are all at stake. Confidence in institutions like the U.S. Supreme Court is already at historic lows. While these concerns often focus on high-profile cases, similar issues (bias, inefficiency, and inequity) are seeping into lower courts. GenAI’s ability to exacerbate delays and disparities only deepens this crisis.

The integrity of our legal system hinges on adapting to this transformative technology. GenAI has immense potential to improve efficiency and access to justice. But without careful oversight, it becomes a Trojan horse, flooding courts with misinformation and undermining faith in the system.

Problem Identification and Solutions

The challenges posed by GenAI in state courts can be divided into two key dimensions:

1. Increased litigation volume due to the ease of generating filings.

2. Creation of falsified evidence—photos, videos, and recordings that appear credible but are patently false.

The first issue, higher caseloads, is less about abuse of AI and more about adaptation. Courts have faced rising caseloads for decades due to technological advancements. While temporary strain is inevitable, expanded access to the courts is, in theory, a positive outcome. One of the more hopeful views on AI is that it narrows the affordability gap, letting people bring legitimate claims that would otherwise go unaddressed.

The second issue, however, is more problematic: AI allows litigants to exaggerate legitimate claims or falsify evidence to gain an unfair edge. Here, the current system of sanctions fails. For high-income litigants, penalties like filing fees or covering legal costs are negligible compared to the potential rewards of a successful case.

A Possible Solution: Punitive sanctions proportional to the level of infraction and the litigant’s income could deter abuse. For the most affluent, penalties could include treble damages based on the amount sought, aligning the risk with the reward. While critics may see this as draconian, it mirrors penalties for other crimes of dishonesty and could inject capital back into court systems to fund necessary reforms.

Addressing Falsified Evidence

GenAI’s ability to create fake evidence—photos, videos, or audio—requires innovative solutions. One promising approach is implementing blockchain-like technologies to validate the provenance of evidence. Filers would proactively submit proof of legitimacy, shifting the burden of authenticity to the submitting party. If provenance can’t be established, the evidence is inadmissible.

This solution reduces costs for courts and opposing parties but comes with its own challenges: implementation requires significant time and funding—resources many state courts lack.
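To make the provenance idea concrete, here is a minimal sketch of a hash chain, which is a simplified stand-in for the "blockchain-like" approach and not a real distributed ledger. It assumes each custody step records a SHA-256 fingerprint of the evidence file plus the hash of the previous entry. The function names (`append_entry`, `verify`) and the entry fields are hypothetical, and a real system would also need digital signatures and trusted timestamps.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    """Hash a record's canonical JSON form with SHA-256."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain: list, evidence: bytes, note: str) -> dict:
    """Append a custody entry that commits to the evidence and the prior entry."""
    entry = {
        "evidence_sha256": hashlib.sha256(evidence).hexdigest(),
        "note": note,  # hypothetical free-text label, e.g. "captured" or "filed"
        "prev_hash": chain[-1]["entry_hash"] if chain else "",
    }
    entry["entry_hash"] = record_hash(entry)
    chain.append(entry)
    return entry


def verify(chain: list, evidence: bytes) -> bool:
    """Return True only if every link is intact and matches the evidence."""
    if not chain:
        return False  # no provenance record at all
    digest = hashlib.sha256(evidence).hexdigest()
    prev = ""
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev:
            return False  # chain was reordered or an entry was dropped
        if record_hash(body) != entry["entry_hash"]:
            return False  # entry was altered after it was recorded
        if entry["evidence_sha256"] != digest:
            return False  # file differs from what was recorded
        prev = entry["entry_hash"]
    return True
```

Under these assumptions, a filer would call `append_entry` at capture and again at filing, and the court would call `verify` on the submitted file. Changing even one byte of the file, or one field of an earlier entry, makes `verify` return `False`.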

Alternatively, courts could develop AI-detection tools to flag fabricated content. However, this approach risks over-reliance. AI evolves exponentially, and detection tools could quickly become obsolete, leaving courts worse off than before.

A simpler solution might focus on education and training for judges and clerks to better recognize AI-generated evidence. While no single solution is perfect, the path forward requires collaboration across legal, technological, and policy sectors to anticipate and address these emerging challenges.

The Road Ahead

GenAI is transformative, and its potential to improve access to justice is real. But its darker implications—overwhelmed courts, inequitable litigation, and falsified evidence—cannot be ignored. As AI adoption accelerates, the legal system must evolve to ensure that innovation serves justice rather than undermines it.

This begins with identifying the risks, developing thoughtful solutions, and opening a dialogue among professionals across disciplines. The future of our courts, and the public’s trust in them, depends on it.

Spotlight Get Off Go with Your AI Policy: A Simple Workflow

Regular readers know that I’ve trained and educated over a thousand lawyers, law students, and business professionals in the Pragmatic and Responsible Use of Generative AI. Along the way, I still come across a surprising number of firms and legal teams that don’t have any AI policy at all.

Now, I’m not here to push you into drafting one if it doesn’t align with your priorities. But I do think there’s value in at least going through the exercise. Even a simple, thoughtful statement on AI use is a good CYA move.

That said, many teams don’t know where to start. Or, they’ve put something together quickly—often generic and non-specific—because, let’s face it, AI is moving fast. That’s perfectly reasonable, but I want to make it even easier for you to get something meaningful on paper.

Here’s a simple workflow I’ve created to help you get off go:

Step 1. The Key Prompt: (copy-n-paste the following)

I would like you to help me draft a comprehensive AI Usage Policy for a law firm. I will answer a series of questions that you ask to collect the relevant information about the firm’s needs, risks, and goals. Based on my responses, you will produce a detailed, customized AI policy that addresses the following:

1. Key Risks and Concerns:

• Confidentiality and data privacy.

• Intellectual property concerns.

• Accuracy and reliability of AI outputs.

• Ethical considerations (e.g., bias and regulatory compliance).

2. Permitted and Prohibited Uses:

• Identify clear use cases for AI tools (e.g., legal research, drafting, administrative tasks).

• Highlight tasks that require strict human oversight or are prohibited.

3. Practical Implementation Steps:

• Monitoring and auditing processes to ensure compliance.

• Staff training and awareness programs.

• Defined roles and responsibilities (e.g., who oversees AI usage).

4. Benefits of AI Adoption:

• Highlight how the policy allows the firm to leverage AI responsibly to improve productivity, streamline tasks, and maintain client trust.

Step 1: Please ask me a comprehensive series of questions to gather all the information needed to create this policy.

Step 2: Based on my answers, produce a well-structured, actionable AI policy.

Step 3 (Optional): Provide an outline of a training or awareness program to help lawyers and staff adopt the AI policy responsibly.

If you’re ready, please begin by asking the first question to collect the necessary information.

Step 2. Answering the Questions: Copy and paste those questions into Word (or wherever you like), and answer them—but keep it crisp. If you find yourself getting long-winded (and we all do), re-prompt the AI to:

“Help me condense this answer into something pithy and clear, without losing its granularity or fidelity.”

Repeat this process for each question until you’ve got a solid, streamlined set of answers.

Step 3. Build the AI Policy: Once you have all your Q&A in place, paste it back into the chat window with a follow-up prompt:

“Use this to draft a comprehensive, actionable AI policy.”

And that’s it. You’ve got a working draft of an AI usage policy—clear, specific, and tailored to your needs.
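If you would rather script Steps 2 and 3 than copy and paste by hand, the workflow above can be sketched in a few lines of Python. This is only an illustration: the helper names (`condense_prompt`, `build_policy_prompt`) are hypothetical, the sample question in the usage note is made up, and the script only assembles text for you to paste into whichever chat tool you use. It does not call any AI service.

```python
# Prompt wording taken from Steps 2 and 3 of the workflow.
CONDENSE = (
    "Help me condense this answer into something pithy and clear, "
    "without losing its granularity or fidelity."
)
FOLLOW_UP = "Use this to draft a comprehensive, actionable AI policy."


def condense_prompt(answer: str) -> str:
    """Wrap a long-winded answer in the Step 2 re-prompt."""
    return f"{CONDENSE}\n\n{answer.strip()}"


def build_policy_prompt(qa_pairs: list) -> str:
    """Join (question, answer) pairs and append the Step 3 follow-up prompt."""
    if not qa_pairs:
        raise ValueError("Answer at least one question before drafting.")
    blocks = [
        f"Q{i}: {question.strip()}\nA{i}: {answer.strip()}"
        for i, (question, answer) in enumerate(qa_pairs, start=1)
    ]
    return "\n\n".join(blocks) + "\n\n" + FOLLOW_UP
```

For example, `build_policy_prompt([("Which AI tools does the firm use today?", "None yet.")])` returns the numbered Q&A followed by the Step 3 prompt, ready to paste back into the chat window.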

If you already have an AI policy, this workflow doubles as a quick and efficient review tool. Use it to refine, update, or pressure-test what you’ve got.

Because here’s the reality: Whether you’re using AI actively or just starting to explore it, a policy—even a basic one—shows intention, builds trust, and sets the groundwork for responsible adoption.

I hope this helps you get moving!

AI Model Notables

► 🎅 OpenAI’s “12 days of OpenAI” continues:

🎁 Day 8 “Gift” - Search ChatGPT

ChatGPT Search is now globally available to free users, accessible via a globe icon in the compose bar.

Displays prominent webpage links, like Netflix or travel sites, before generating text-based responses.

Adds voice search via Advanced Voice Mode, offering responses in one of 10 preset AI voices.

Plus, ChatGPT’s mobile app now integrates with Apple Maps (iOS) and Google Maps (Android) for location-based search results.

🎁 Day 9 “Gift” - For Developers

If you are one - check out the news here.

► Try Grok2 for free on X (FKA Twitter). Premium and Premium+ users get higher usage limits and will be the first to access any new capabilities in the future.

► Google has unveiled Veo 2 and Imagen 3, two advanced AI models for video and image generation, pushing the boundaries of quality, realism, and prompt accuracy. Google is also releasing Whisk: instead of generating images with long, detailed text prompts, Whisk lets you prompt with images. Simply drag in images and start creating.

► Most iPhone owners see little to no value in Apple Intelligence so far. I agree!! Do you?

News You Can Use:

➭ Will DOGE's aim at Thomson Reuters hurt their legal AI business?

➭ In an effort to address Elon Musk’s criticism of its for-profit shift, OpenAI has released emails showing Musk’s initial support for the company’s business model.

➭ ACLU warns police shouldn’t use generative AI to draft reports.

➭ Mark Zuckerberg sides with Elon Musk in his battle against the maker of ChatGPT. Read the letter from Meta here.

➭ Trump and SoftBank announce a $100 billion investment in the U.S. aimed at creating 100,000 jobs in AI and related infrastructure.

➭ Former OpenAI researcher and whistleblower who voiced concerns publicly that the company had allegedly violated U.S. copyright laws in building its popular ChatGPT chatbot was found dead at age 26.

➭ The Homeland Security Department is launching DHSChat, an internal chatbot designed to allow about 19,000 workers at the department's headquarters to access agency information using generative AI.

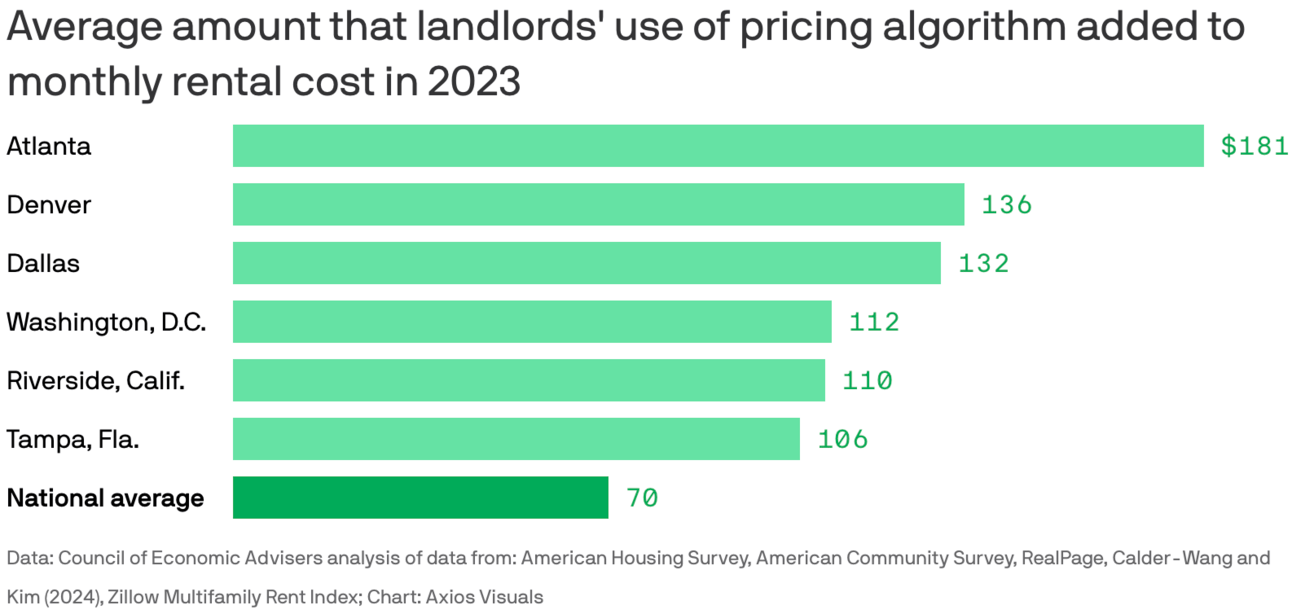

➭ Renters in the U.S. spent an extra $3.8 billion last year because landlords used pricing algorithms, largely RealPage's AI-powered software, which uses data from participating landlords and property management companies to generate pricing recommendations for rental units.

➭ Job opening at OpenAI: Public Sector Lead Counsel.

Was this newsletter useful? Help me to improve!

Who is the author, Josh Kubicki?

Some of you know me. Others do not. Here is a short intro. I am a lawyer, entrepreneur, and teacher. I have transformed legal practices and built multi-million dollar businesses. Not a theorist, I am an applied researcher and former Chief Strategy Officer, recognized by Fast Company and Bloomberg Law for my unique work. Through this newsletter, I offer you pragmatic insights into leveraging AI to inform and improve your daily life in legal services.

DISCLAIMER: None of this is legal advice. This newsletter is strictly educational and is not legal advice or a solicitation to buy or sell any assets or to make any legal decisions. Please be careful and do your own research.