It’s Friday. Sotheby’s will be auctioning “AI God” - a painting by the AI humanoid robot artist Ai-Da. Very interesting, but my questions are: Is Ai-Da the brush (tool) or the artist (creator/owner)? And who owns the rights to this image? I covered some of this in edition 238, “👯 Use your Zoom digital twin for your next meeting.”

But hey, if you have a spare $100k or so, you could become the owner of this painting.

Onward 👇

In today’s Brainyacts:

Election misinformation & AI disclaimer wordings matter

Napkin.ai walkthru - great graphics for PowerPoints & blogs

Two major models update their apps and other AI model news

High school student uses AI for an outline and gets barred from honor society - parents sue, and more news you can use

👋 to all subscribers!

To read previous editions, click here.

Lead Memo

🗳️ 🐺 Election misinformation & AI disclaimer wording

In this essay, James Jordan and Joy Ruiz, law students of mine at Indiana University’s Maurer School of Law, explore the growing influence of generative AI in politics, particularly focusing on deepfakes and their potential impact on elections. As AI technology becomes more advanced, it is increasingly used to create misleading content that can shape public perception. They examine both the dangers of this misuse and the role of disclaimers, which vary by state and AI platform, in influencing how much trust or skepticism people place in AI-generated content. Through their analysis, they highlight the importance of transparency and neutrality in the wording of these disclaimers.

Deepfakes & Elections

As generative AI models become more advanced, they also empower those who seek to spread outrageous deepfake content online. Not all deepfakes are harmful. Many are created as parody, so outlandish that they are (1) clearly fake and (2) intended purely for comedic effect, and these lighthearted creations provide a harmless laugh. However, not all deepfakes fall into this category. AI has also given individuals with malicious intent a powerful tool to easily create and spread harmful deepfake content, often targeting public figures and posing significant risks to their reputations and credibility. This type of content becomes especially dangerous during critical moments, such as elections, when it can significantly influence public opinion and damage the reputations of candidates.

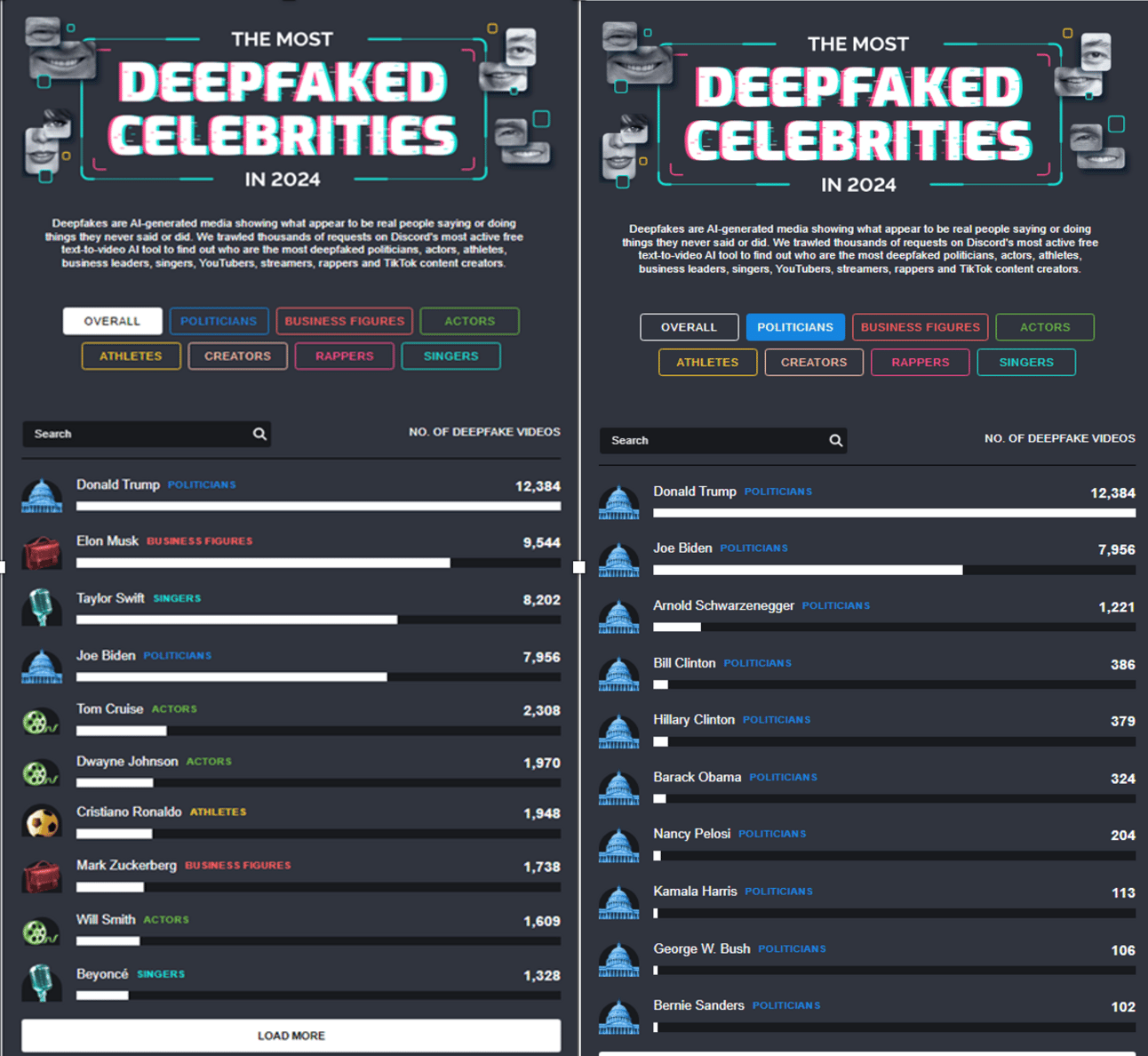

A recent study by Kapwing offers remarkable insight into how easily high-profile individuals can be deepfaked. Kapwing aimed to identify the public figures most frequently targeted by deepfakes in 2024, analyzing data from a popular AI video Discord channel that focused on 500 influential figures in American culture. Unsurprisingly, Donald Trump topped the list as the most deepfaked celebrity by a large margin, with Joe Biden ranking fourth. Interestingly, Kamala Harris had significantly fewer deepfakes than either Trump or Biden, which highlights how certain figures are disproportionately targeted.

So why does this matter? Many of these deepfakes are distributed across social media platforms such as Instagram, Facebook, X, and YouTube, which allows them to reach a large audience. While many users of these platforms can tell that they are viewing a deepfake, many cannot, as not all deepfakes are obvious satire. Social media platforms are not required to include any indication that a post is AI-generated, which leaves that determination to the viewer.

With the U.S. general election just a couple of weeks away, it may be worthwhile to reflect on the impact generative AI has had on how we view this year’s major candidates: Donald Trump and Kamala Harris. Deepfakes of both candidates have swept the internet, some positive, some negative.

The following deepfake depicted Donald Trump in knee-deep murky water helping victims of Hurricane Helene:

This AI-generated photo was reposted all over Facebook, Instagram, and other forums, with many users oblivious to its inauthenticity. The original poster captioned the photo with “I don’t think [Facebook] wants this picture on [Facebook]. They have been deleting it.” Some users asserted that the photo was real, while others pointed out that they could tell it was AI because the hands are distorted. The post has since been shared over 166,000 times.

On the other side of the political aisle, an almost two-minute-long audio deepfake depicted Kamala Harris calling herself the “ultimate diversity hire.” The AI-generated audio, created by the user “Mr Reagan,” was laid over a real campaign video, blurring the lines between reality and fiction for many voters.

This deepfake was reposted by Elon Musk with the caption “This is amazing [laughing emoji]”. His post has since been shared over 217,200 times.

These fake images were created without the subjects’ consent, but what if the candidates used generative AI to their benefit? Donald Trump reposted a deepfake photo of himself kneeling in prayer. With a large number of his supporters being Evangelicals, this AI-generated photo may elicit a favorable response. Does it matter to these voters whether the photo is real? Or does it only matter that the photo is in line with these voters’ expectations of Donald Trump?

Generative AI has been used in politics worldwide, not just in the U.S. A political candidate in India used generative AI to create a video of his deceased father endorsing him. This use of generative AI was more obvious because the subject of the deepfake had been deceased for several years; deepfakes of living people, however, are harder to distinguish.

AI Disclaimer Wording

Take a moment to ask yourself: In future elections, would you trust a political candidate who discloses the use of generative AI in their advertisements more, or less, than one who doesn’t? A recent study from the NYU Center on Tech Policy tells us that the answer is less. The following graph demonstrates the findings:

*The scale is 1-5: 1 as “extremely not trustworthy” and 5 as “extremely trustworthy”.

🅰️ This video has been manipulated by technical means and depicts speech or conduct that did not occur. [Michigan’s required label.]

🅱️ This video was created in whole or in part with the use of generative artificial intelligence. [Florida’s required label.]

A possible explanation for the discrepancy between the Michigan and Florida values is the wording of the disclaimer. Michigan’s disclaimer uses the word “manipulated” and the phrase “speech or conduct that did not occur”, whereas Florida’s disclaimer uses neutral words to explain that it was created “in whole or in part with the use of generative artificial intelligence.” Skepticism is normal when new technology comes along, but neutrally worded disclaimers and responsible outlets may be the key to the public becoming more comfortable with generative AI.

Deepfake software created by Kapwing adds a simple watermark stating “AI Generated on Kapwing” with the aim of encouraging responsible deepfake creation and use. HeyGen has implemented similar safeguards. For example, users must submit a brief video message from the person being deepfaked, in which that person explicitly consents to the use of their image and voice for creating the deepfake material. Without this verification video, users may not go forward with the deepfake.

HeyGen offers candidates an efficient way to spread their message. Instead of requiring hours or days of filming for political ads, it quickly generates an authentic image and voice of the candidate, allowing candidates to focus more on policy and public appearances than on ad production.

While bad actors may misuse generative AI for political purposes, this doesn't mean all generative AI is harmful; when used responsibly, it can offer safe and efficient ways for candidates to engage with voters during an election. As AI continues to prove its potential, it becomes a powerful tool that promotes efficiency and security and alleviates creative fatigue.

Click here for a brief message from Mark Zuckerberg.

Spotlight

🧑🏫 🤩 Great AI pics for your PowerPoints & Blogs

Napkin.AI - First Walkthrough Video

In this short video, I walk you through Napkin.AI, a brand-new generative AI tool that turns your text into visuals. Think of it as a fast way to create visual representations for presentations, blogs, or notes. It’s simple—paste in your text, generate visuals, and choose the styles you like. This is a quick, visual-first approach that can spice up your content. It’s currently in beta, and you can try it for free!

Key Features of Napkin.AI:

• Turns text into visuals: Paste your notes or documents, and the tool generates graphics to represent the content.

• Versatile export options: Export your visuals as PNG, PDF, SVG, or even shareable URL links.

• Different visual styles: Offers a variety of styles from doodles to professional-looking graphics.

• Perfect for PowerPoint & blogs: Easily embed the visuals into presentations or online content.

• Free to use (beta): Currently available for free with easy sign-up via Google.

Check out the video for a first look!

AI Model Notables

► Now, ChatGPT Plus, Enterprise, Team, and Edu users can start testing an early version of the Windows desktop app.

► Anthropic updates its apps, bringing more functionality to the mobile version.

► Nvidia just dropped a new AI model that crushes OpenAI’s GPT-4—no big launch, just big results.

► Amazon goes nuclear, investing more than $500 million to develop small modular reactors.

► Apple launches new iPad mini with AI features.

► Also, Apple is working on smart glasses and AirPods with built-in cameras for a potential release in 2027.

► OpenAI and Bain expand partnership to co-develop and sell industry-specific tools and to get more corporations to use and integrate generative AI.

News You Can Use:

➭ Parents sue son’s high school history teacher over AI ‘cheating’ punishment. The legal complaint said using artificial intelligence to assist in crafting an outline didn’t violate school rules at the time.

➭ AI helped the US government catch $1 billion of fraud in one year. And it’s just getting started.

➭ White House considers extending export limits on Nvidia’s and AMD’s AI chips to additional countries, following last year’s ban on selling chips to China.

➭ Hong Kong police have arrested 27 people for allegedly carrying out romance scams using deepfake face-swapping technology that swindled victims out of $46 million. The technology transformed the scammers’ appearances and voices into those of highly attractive women in looks, attire, and speech, leading the victims to trust them unquestioningly.

➭ Hilton has announced an exclusive partnership with Be My Eyes, an app designed to assist those who are blind or have low vision, to provide a more accessible hotel experience and to help guests better recognize objects and navigate hotel room layouts.

Was this newsletter useful? Help me to improve!

Who is the author, Josh Kubicki?

Some of you know me. Others do not. Here is a short intro. I am a lawyer, entrepreneur, and teacher. I have transformed legal practices and built multi-million dollar businesses. Not a theorist, I am an applied researcher and former Chief Strategy Officer, recognized by Fast Company and Bloomberg Law for my unique work. Through this newsletter, I offer you pragmatic insights into leveraging AI to inform and improve your daily life in legal services.

DISCLAIMER: None of this is legal advice. This newsletter is strictly educational and is not legal advice or a solicitation to buy or sell any assets or to make any legal decisions. Please be careful and do your own research.